What is the robots.txt file, and why and how should you add it to your blog?

Many bloggers do not understand the robots.txt option or have never bothered with it. They assume that leaving it empty does no harm. In fact, this option plays an important role in search engine optimization, and without search engine optimization your blog is less likely to get enough visitors. However, copying someone else's robots.txt file into your blog without understanding it is not a good idea. You need to know what the file does before you activate it.

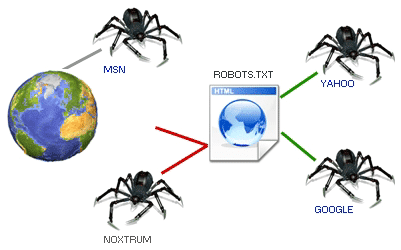

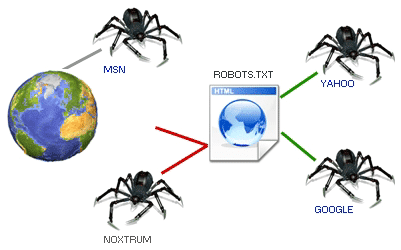

What is the robots.txt file: Every search engine has its own web robot. You might picture something like Rajinikanth's robot from the Hindi film, but it is nothing like that. A web robot is a program that a search engine sends out to visit and examine websites. Through the robots.txt file, you tell those robots whether your blog or website may be crawled and indexed. If you wish, you can use the robots.txt file to let robots crawl and index your blog, or to forbid them. You can also allow some posts to be crawled and indexed while blocking others.

How does the robots.txt file work: The robots.txt file is like a flight announcer at an airport. Just as the announcer tells passengers when each flight is due, the robots.txt file tells robots about your blog's new posts when they arrive to crawl it. As a result, your newly published articles reach the search engines easily.
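To make this concrete, here is a minimal sketch of how a well-behaved robot might consult robots.txt before visiting a page. It uses Python's standard `urllib.robotparser` module. The rules, the robot name `ExampleBot`, and the `example.blogspot.com` address are assumptions for illustration only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example blog (not from a real site).
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Allow: /
"""


def may_crawl(robot_name: str, url: str) -> bool:
    """Return True if the rules in ROBOTS_TXT let robot_name crawl url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(robot_name, url)


if __name__ == "__main__":
    for url in (
        "https://example.blogspot.com/",
        "https://example.blogspot.com/search?q=seo",
    ):
        # A polite robot skips any URL for which this prints False.
        print(url, may_crawl("ExampleBot", url))
```

Real search engine robots use their own parsers, so treat this only as a way to see the idea in action.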

User-agent: Mediapartners-Google: A User-agent line names the robot that the following rules apply to. Mediapartners-Google is the Google AdSense robot. If you use Google AdSense on your blog, keep this section. If this robot is disallowed, AdSense will not learn anything about your blog's pages and cannot choose advertisements for them. If you are not using Google AdSense, delete the two lines for this robot.

User-agent: * means all robots. When you put the * symbol after User-agent, the rules that follow are addressed to every robot.
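The difference between a named robot and the * group can be sketched like this. The rules below are an assumed example with one group for the AdSense robot and one for everyone else; `Googlebot` stands in for any other robot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for the AdSense robot, one for all other robots.
RULES = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())


def allowed(robot_name: str, path: str) -> bool:
    """Check a path against the group that matches robot_name."""
    return parser.can_fetch(robot_name, path)


if __name__ == "__main__":
    # The AdSense robot has its own group with an empty Disallow.
    print(allowed("Mediapartners-Google", "/search/label/SEO"))
    # Any other robot falls back to the * group.
    print(allowed("Googlebot", "/search/label/SEO"))
```

A robot that finds a group with its own name follows that group; every other robot follows the * group.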

Disallow: /search The Disallow keyword tells robots what they must not crawl. Here it means that your blog's search links will not be crawled or indexed. For example, if you look at a Label link on your blog, you will see that its address contains /search before the label name. So this rule tells robots not to crawl the Label links, because label links do not need to be indexed by search engines.
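A small sketch shows why label links are caught by this rule. It only looks at the path part of the address. The blog address and label name are made up for the example.

```python
from urllib.parse import urlparse


def is_search_link(url: str) -> bool:
    """Return True if the URL path begins with /search, as label links do."""
    # robots.txt rules are prefix matches on the path of the URL.
    return urlparse(url).path.startswith("/search")


if __name__ == "__main__":
    # Hypothetical label link and post link on an example blog.
    print(is_search_link("https://example.blogspot.com/search/label/SEO"))
    print(is_search_link("https://example.blogspot.com/2017/09/my-post.html"))
```

The label link begins with /search and is blocked, while the ordinary post link is not affected.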

Allow: / The Allow keyword tells robots what they may crawl. The '/' sign is the root of your blog, so robots may crawl and index your home page and everything under it that is not disallowed. As you may notice after submitting your site to Google Webmaster Tools, the number of indexed pages it reports is always higher than your number of posts. That is not an error; it is counting your home page too.

Sitemap: This tells robots where to find your new posts so that they can be indexed. Blogger has a default sitemap, but by default it does not cover more than 25 posts. This is why you add a Sitemap link to the robots.txt file as well as submitting it to Google Webmaster Tools.
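Putting the directives described above together, a complete file could be assembled as in the sketch below. The function name `build_robots_txt`, the blog address, and the `/sitemap.xml` location are assumptions; use the sitemap address of your own blog.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(blog_url: str, uses_adsense: bool = True) -> str:
    """Assemble the directives described above into robots.txt text."""
    lines = []
    if uses_adsense:
        # Empty Disallow: the AdSense robot may crawl everything.
        lines += ["User-agent: Mediapartners-Google", "Disallow:", ""]
    lines += ["User-agent: *", "Disallow: /search", "Allow: /", ""]
    # Assumed sitemap location; replace it with your blog's real sitemap URL.
    lines.append(f"Sitemap: {blog_url.rstrip('/')}/sitemap.xml")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = build_robots_txt("https://example.blogspot.com")
    print(text)
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    # site_maps() is available in Python 3.8 and later.
    print(parser.site_maps())
```

Passing `uses_adsense=False` leaves out the two AdSense lines, which matches the advice for blogs that do not show AdSense advertisements.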

To understand it better, here is a short recap.

User-agent:

This names the robot that the rules after it are addressed to.

The * symbol stands for all robots.

Disallow:

This means that robots must not crawl the directory named after it. Note: if Disallow is given no directory, it works like Allow.

Allow:

This means that robots may crawl the directory named after it.
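The note about an empty Disallow is easy to check with a short sketch. The helper `can_crawl` and the robot name `ExampleBot` are made up for this example; the parser is Python's standard `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(disallow_value: str, path: str) -> bool:
    """Apply a single Disallow rule to all robots and test one path."""
    parser = RobotFileParser()
    # rstrip() turns "Disallow: " into "Disallow:" when the value is empty.
    parser.parse(["User-agent: *", f"Disallow: {disallow_value}".rstrip()])
    return parser.can_fetch("ExampleBot", path)


if __name__ == "__main__":
    post = "/2017/09/my-post.html"
    print(can_crawl("", post))         # empty Disallow: nothing is blocked
    print(can_crawl("/", post))        # Disallow: / blocks the whole blog
    print(can_crawl("/search", post))  # only paths under /search are blocked
```

An empty Disallow blocks nothing, while a Disallow with a single '/' blocks the whole blog, so a one-character slip makes a large difference.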

How to add it to the blog:

From the Blogger dashboard, click Settings > Search preferences.

Then click the Edit button for Custom robots.txt, as shown in the figure.

Then enable custom robots.txt content.

Click Yes and a box will appear. Copy and paste your robots.txt code into this box.

Then click Save to finish. That's all.
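After saving, you may want to confirm that the blog really serves the new rules. A robots.txt file always sits at the root of the site, so its address can be worked out from any page of the blog. The sketch below is one way to do this with Python's standard library; the function names are made up for the example, and `load_live_rules` needs an internet connection.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser


def robots_txt_url(blog_url: str) -> str:
    """Return the robots.txt address at the root of the site."""
    return urljoin(blog_url, "/robots.txt")


def load_live_rules(blog_url: str) -> RobotFileParser:
    """Download and parse the blog's robots.txt (needs network access)."""
    parser = RobotFileParser()
    parser.set_url(robots_txt_url(blog_url))
    parser.read()
    return parser
```

For example, `load_live_rules("https://example.blogspot.com/")` (a placeholder address) returns a parser whose `can_fetch` method can be asked whether a label link is still open to robots.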

If you have any problems, ask me.

Thank you, everyone.

Reviewed by Mahmud on September 26, 2017
